Docker is now mainstream and has spread to businesses in every sector. Although the underlying technology is nothing new, this massive adoption is due to Docker's ease of use which, as we are going to see, can lead to leaked secrets when its concepts are misunderstood.

Docker is a containerization engine that allows you to package an application with all its dependencies. This ready-to-use application template is called a Docker image. There are various types of images, such as web servers or databases. Images can be stacked and can depend on each other. Images can be retrieved from public registries such as Docker Hub, which currently hosts more than 2.3 million of them.

As with GitHub, you can store your images for free provided they are publicly available to anyone; private hosting is a paid option. You can also maintain your own Docker registry.

Whether through unawareness of the risk, by accident, or as a budget decision, these public registries contain Docker images that should not be left exposed. Many of them leak internal code, secrets or personal information. We estimate that roughly 10% of Docker images hosted on Docker Hub should not be publicly exposed because they leak sensitive data.

For the rest of this blog post, "image" refers to a Docker image.

Why analyze a Docker image?

The main purpose of analyzing an image is to verify its integrity and to check the versions of its components for known CVEs, so that it can be used safely.

Checking an image's legitimacy helps you avoid running a malicious one. This is what happened in May 2017, when a single user released 17 backdoored images on Docker Hub; they contained a cryptocurrency miner and generated about $90,000 in value.

To check that no known CVEs affect the kernel or frameworks used, there are solutions such as Aqua, which also runs its own Docker registry and scans the images that users upload or build.

Our scope here is data leakage from publicly exposed images. Monitoring code repositories for secrets has received wide media coverage after several cases, such as Uber's 2016 data leak, whereas leaks from Docker images stay under the radar, hence this blog post. To be clear, the leaks come from misuse by users, not from Docker Hub or the Docker platform, which take steps to prevent these risks.

What’s inside a Docker image?

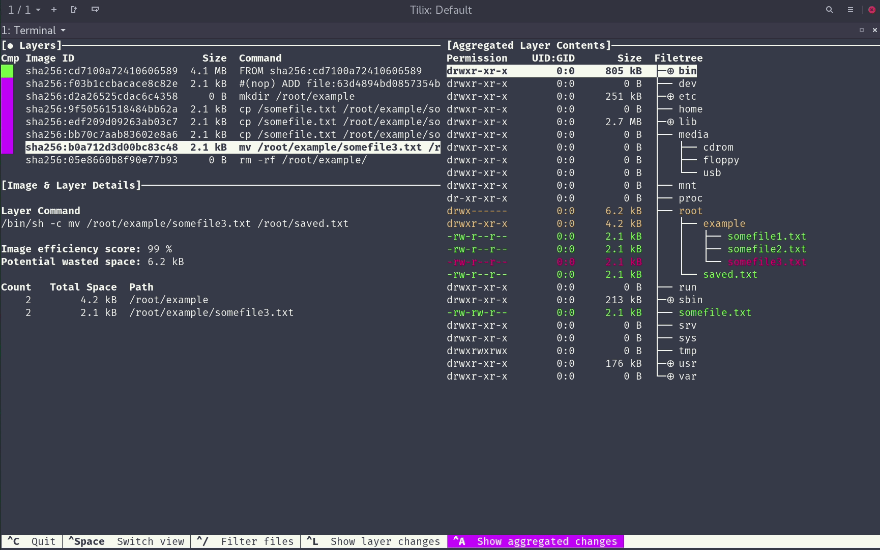

A Docker image is an archive containing, among other things, the filesystem the application needs in order to run. It is structured in layers, each one corresponding to an instruction given in the Dockerfile when the image was built.

For example, to see the instructions used to build the mysql image:

docker pull mysql && docker history mysql

To explore the image in detail, you can export it as a tar archive and dive into its layers:

docker save mysql | tar xvf - --one-top-level=mysql && ls mysql/*/layer.tar

Fortunately, Alex Goodman has released a wonderful tool called dive to explore each layer of an image. It shows which files are new and which have been edited or removed. Under the hood, dive also works from the tar archive of the image and computes the differences between successive layers.
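The layer-by-layer view described above can be approximated in a few lines. This is a minimal sketch, not how dive is implemented: it assumes the archive produced by `docker save` contains a `manifest.json` whose first entry lists the layer archives in build order under `Layers`, and that deleted files appear as whiteout entries prefixed with `.wh.`. The function name `list_layer_changes` is ours.

```python
import io
import json
import tarfile


def list_layer_changes(image_tar_path):
    """Return [(layer_name, added_or_changed_files, removed_paths), ...] in build order."""
    changes = []
    with tarfile.open(image_tar_path, mode="r:*") as image:
        # Assumption: manifest.json lists the layer archives of the first image.
        manifest = json.load(image.extractfile("manifest.json"))
        for layer_name in manifest[0]["Layers"]:
            layer_bytes = image.extractfile(layer_name).read()
            added, removed = [], []
            with tarfile.open(fileobj=io.BytesIO(layer_bytes), mode="r:*") as layer:
                for member in layer.getmembers():
                    directory, _, base = member.name.rpartition("/")
                    if base == ".wh..wh..opq":
                        # Opaque marker: everything below this directory is hidden.
                        removed.append(directory or ".")
                    elif base.startswith(".wh."):
                        # Whiteout entry: the named file was deleted in this layer.
                        original = base[len(".wh."):]
                        removed.append(f"{directory}/{original}" if directory else original)
                    elif member.isfile():
                        added.append(member.name)
            changes.append((layer_name, added, removed))
    return changes
```

A file that shows up as added in one layer and removed in a later one is still present in the earlier layer's archive, which is exactly why deleting a secret in a later Dockerfile instruction does not remove it from the image.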

What could go wrong?

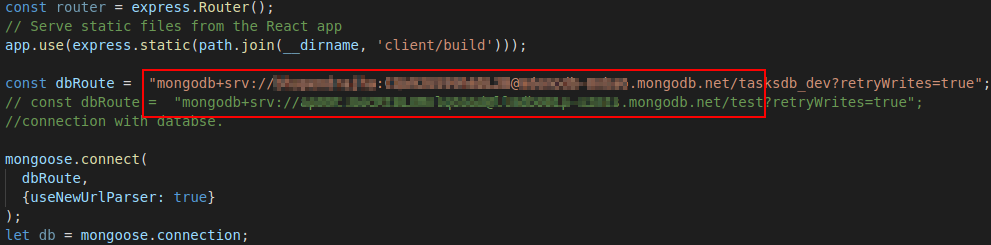

Secrets embedded in code

The first bad practice is to embed secrets, such as an AWS key or a Dropbox access token, in your code. This is not specific to Docker but relates to secure development in general. When you copy your code into the image you also copy the secrets, and if the image goes public, so do your secrets.

Remediation: Separate your secrets and configuration data from your code. Pass the secrets as arguments or environment variables when you run your image.
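On the application side, this remediation could look like the sketch below: the code holds no secret and reads it from the environment at startup, failing loudly if it is missing. The helper name `get_required_secret` and the variable name `API_TOKEN` are hypothetical.

```python
import os


def get_required_secret(name, environ=os.environ):
    """Return the secret stored in environment variable `name`, or fail loudly."""
    value = environ.get(name)
    if not value:
        # Refuse to start rather than fall back to a value baked into the code.
        raise RuntimeError(f"Missing required secret: set {name} when running the container")
    return value
```

The application would then call `get_required_secret("API_TOKEN")` once at startup, with the value supplied at run time rather than at build time.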

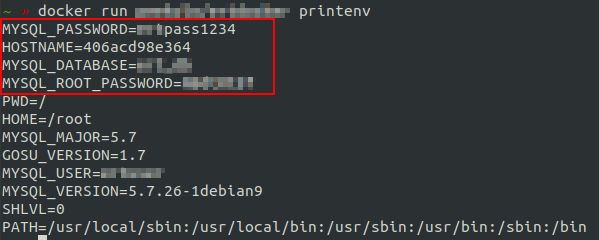

Secrets in environment variables

OK, so you have separated your secrets and configuration from your code by putting them in environment variables. But if you set these environment variables in your Dockerfile, anyone can again see them, either by printing the history of commands used to build the image or by printing the environment variables inside a running instance of the image.

docker history <image_to_inspect>

# OR

docker run <image_to_inspect> printenv

Remediation: Set sensitive environment variables when running your image, either with the -e option or collected in a file passed with --env-file.
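To catch this mistake before the image is built, a simple check on the Dockerfile can flag `ENV` instructions, and `ARG` instructions with a default value, whose variable name looks like a secret. This is a rough sketch under stated assumptions: the keyword list is hypothetical, line continuations are not handled, and the function name `find_hardcoded_secrets` is ours.

```python
import re

# Hypothetical keyword list; adapt it to your own naming conventions.
SECRET_HINTS = re.compile(r"SECRET|TOKEN|PASSWORD|PASSWD|API_?KEY|ACCESS_?KEY", re.IGNORECASE)
ENV_OR_ARG = re.compile(r"^\s*(ENV|ARG)\s+(.+)$", re.IGNORECASE)
# Variable names at the start of a "NAME=value" token.
ASSIGNED_NAME = re.compile(r"(?:^|\s)([A-Za-z_][A-Za-z0-9_]*)=")


def find_hardcoded_secrets(dockerfile_text):
    """Return [(line_number, variable_name), ...] for secret-looking ENV/ARG values."""
    findings = []
    for line_number, line in enumerate(dockerfile_text.splitlines(), start=1):
        match = ENV_OR_ARG.match(line)
        if not match:
            continue
        rest = match.group(2).strip()
        parts = rest.split(None, 1)
        if "=" in parts[0]:
            # Form "ENV NAME=value [OTHER=value ...]".
            names = ASSIGNED_NAME.findall(rest)
        else:
            # Form "ENV NAME value"; a bare "ARG NAME" carries no value, so skip it.
            names = [parts[0]] if len(parts) == 2 else []
        for name in names:
            if SECRET_HINTS.search(name):
                findings.append((line_number, name))
    return findings
```

Such a check only looks at variable names, so it complements rather than replaces a review of what `docker history` shows for the built image.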

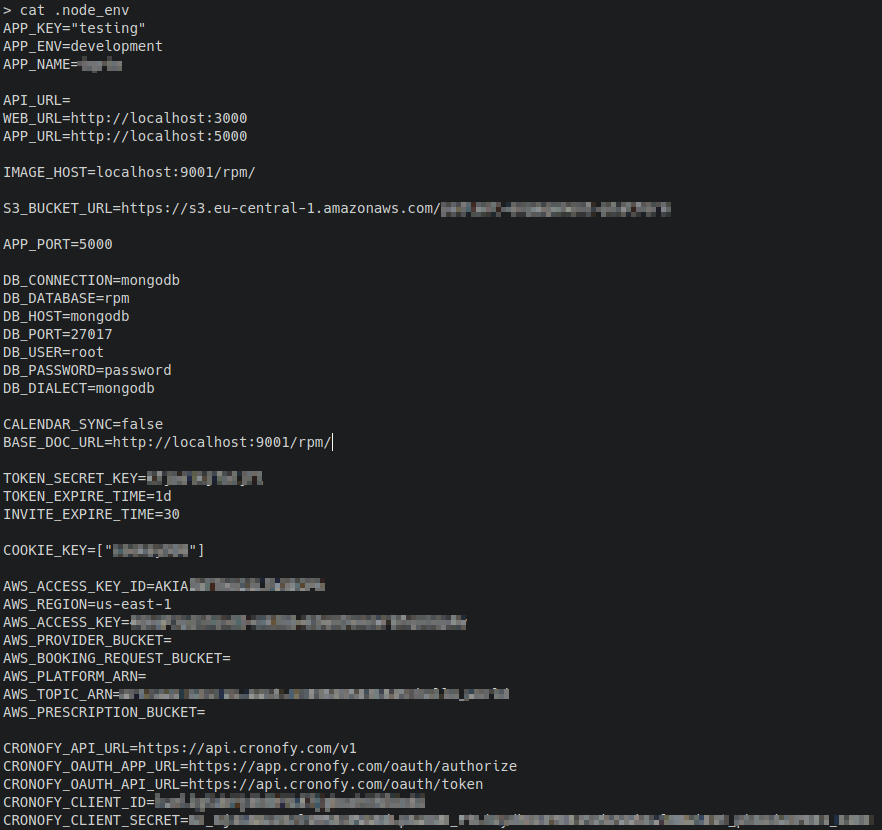

A (secrets) file copied into your Docker image

You are now using an environment file named .env, stored in your working directory and containing all your secrets, which you pass to the run command. But you forgot to exclude it when using the COPY instruction in your Dockerfile (for example, to copy your code into the image). The secrets are therefore copied into your Docker image, where anyone can access them.

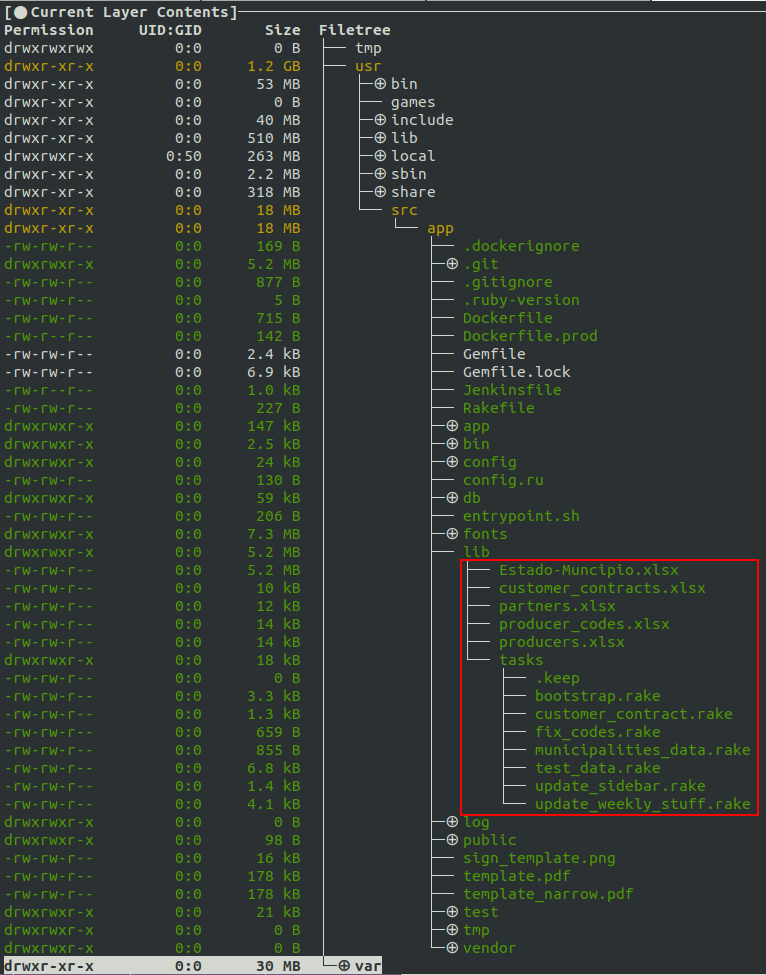

When you copy your whole working directory indiscriminately, you may also copy the .git directory or non-code data files that were never meant to be in the image.

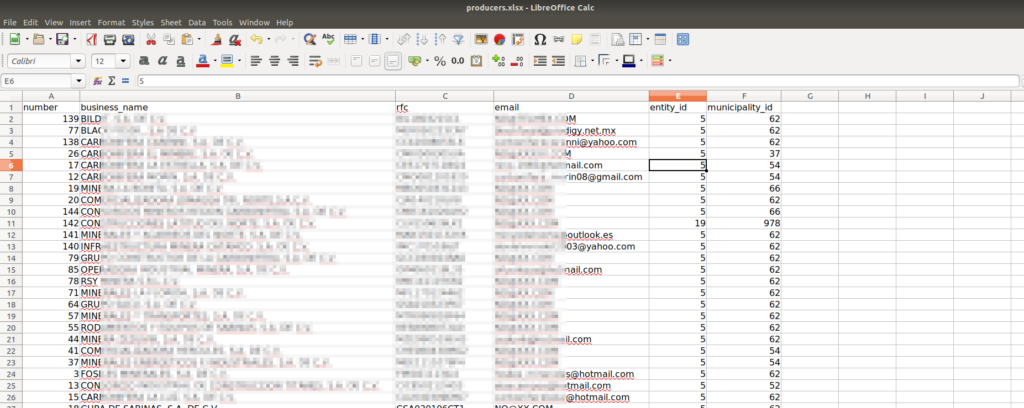

Files that seem interesting to look at

Customer data

Remediation: Use a .dockerignore file specifying what to exclude from the build context, such as .git or .env files. For files that are not expected in the project, and therefore not listed in .dockerignore, such as result files or samples of production data used for testing, you can add a script to the CI/CD chain that checks for, and alerts on, files with unlikely extensions (xlsx, docx, …) or suspicious filenames (customer_name, financial_results, …), according to local practices.
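The CI/CD check mentioned in this remediation could be sketched as follows. The extension and filename lists simply reuse the examples given above and are meant to be tuned to local practices; the function name `find_suspicious_files` is hypothetical, and the sketch walks the whole directory without honoring .dockerignore.

```python
import os

# Defaults taken from the examples in the text; tune them to local practices.
SUSPICIOUS_EXTENSIONS = {".xlsx", ".docx"}
SUSPICIOUS_NAME_PARTS = ("customer_name", "financial_results")


def find_suspicious_files(build_context):
    """Return the sorted relative paths of files that look out of place in a build context."""
    findings = []
    for root, _dirs, files in os.walk(build_context):
        for filename in files:
            lowered = filename.lower()
            extension = os.path.splitext(lowered)[1]
            has_bad_extension = extension in SUSPICIOUS_EXTENSIONS
            has_bad_name = any(part in lowered for part in SUSPICIOUS_NAME_PARTS)
            if has_bad_extension or has_bad_name:
                full_path = os.path.join(root, filename)
                findings.append(os.path.relpath(full_path, build_context))
    return sorted(findings)
```

A pipeline step could call this on the build context and fail the job, or raise an alert, whenever the returned list is not empty.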

Best practice

When managing sensitive data, the best thing to do is to use Docker secrets or a third-party solution like Vault.
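With Docker secrets, a secret granted to a swarm service is exposed to the container as a file under /run/secrets/ named after the secret, instead of being baked into the image or its environment. A minimal sketch of reading one from application code follows; the helper name `read_docker_secret` and the secret name `db_password` are hypothetical.

```python
from pathlib import Path


def read_docker_secret(name, secrets_dir="/run/secrets"):
    """Return the content of the secret file `name`, without its trailing newline."""
    secret_file = Path(secrets_dir) / name
    return secret_file.read_text(encoding="utf-8").rstrip("\n")
```

The application would call `read_docker_secret("db_password")` at startup; the `secrets_dir` parameter only exists so the function can be exercised outside a container.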

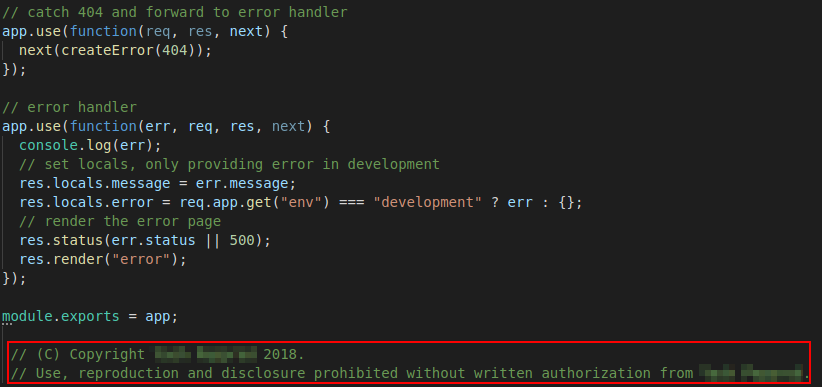

Your code, your value

You are now safe: your sensitive data is not exposed within your image. But should the image itself be exposed to anyone on the Internet? You took care not to use a public GitHub repository for your code, yet you just uploaded an image containing that code to a publicly exposed Docker repository.

Remediation: Use a private repository for your image, or host your own Docker registry, obviously not exposed to the Internet.

Attack scenarios

The opportunist

The goal is to target many images and harvest low-hanging secrets, such as AWS keys or other cloud access tokens, by grepping for them in files. The attacker can then monetize them (for example by mining cryptocurrencies) or reuse them to explore valuable accessible data (S3 buckets, …).

How? By targeting images whose names contain words like prod, backup or site, ideally combined with the possessive my, as in mybackup. Tip: keep only images with low popularity (fewer than 50 downloads).

This way we target individuals and small organizations, which are probably less aware of security and therefore of Docker good practices.

However, larger organizations are not spared, as shown by the case of Vine in March 2016, where a researcher found an image containing all of the company's source code and was awarded a $10,080 bounty for the find.

This approach is easy for a malicious actor to automate, hence the importance of monitoring new or updated public images that may concern your business so that you can react in time.
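The same grep can be turned around and run on your own images before they are published. The sketch below walks the extracted filesystem of an image and reports strings that look like AWS access key IDs. It assumes the commonly seen format of "AKIA" followed by 16 uppercase letters or digits; other key types and other providers would need their own patterns. The function name `find_aws_key_ids` and the `max_bytes` limit are our own choices.

```python
import os
import re

# Assumption: long-term AWS access key IDs look like "AKIA" + 16 uppercase letters or digits.
AWS_ACCESS_KEY_ID = re.compile(rb"\bAKIA[0-9A-Z]{16}\b")


def find_aws_key_ids(root_dir, max_bytes=1_000_000):
    """Return sorted (relative_path, key_id) pairs found under root_dir."""
    hits = []
    for root, _dirs, files in os.walk(root_dir):
        for filename in files:
            path = os.path.join(root, filename)
            try:
                with open(path, "rb") as handle:
                    # Only the first max_bytes of each file are inspected.
                    data = handle.read(max_bytes)
            except OSError:
                continue  # unreadable file: skip it
            for match in AWS_ACCESS_KEY_ID.finditer(data):
                relative_path = os.path.relpath(path, root_dir)
                hits.append((relative_path, match.group().decode("ascii")))
    return sorted(hits)
```

Any hit should be treated as a key to revoke and rotate, not just a file to delete, since earlier layers or earlier pushes of the image may still contain it.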

The persistent

Someone targeting a specific business or sector will thoroughly investigate every published image related to the targeted company to find internal code and secrets and to understand how it works. The aim is espionage or preparing an attack.

This is the same reconnaissance we perform on images that may concern our customers, so that we can warn them before someone exploits them.

Some numbers to conclude

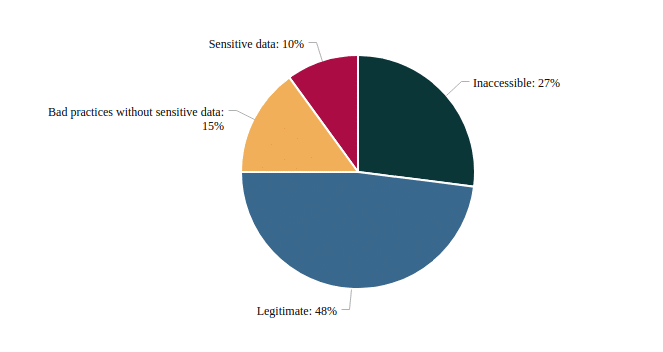

Beyond the automated approach behind the industrial, continuous monitoring provided by Intrinsec, we manually reviewed 100 random Docker images for the purpose of this blog post. Yes, it is a small sample, but the aim is an order of magnitude rather than a precise metric.

- 10% contain sensitive data: credentials, private keys, API tokens and personal information of users or customers

- 15% show bad practices but no sensitive data: configuration variables, git folder, …

- 48% seem legitimate: no sensitive data and no bad practices

- 27% are inaccessible: gone private or removed

So, if we keep only the images that can still be pulled publicly (73 of the 100), about 14% of them (10 of 73) leak sensitive data and should absolutely not be exposed.

If this subject is of interest to you for protecting your business, we would be glad to discuss our threat intelligence services with you.

We would like to thank Vincent Delair and Jacques Lebeau for their help.

Authors:

Guillaume Granjus: @ggranjus

Charles Hiezely