Log loss detection and machine learning: a possible use case with AI?

For SOC analysts, it is very important to know when a loss of logs is occurring or may have occurred. When a SIEM no longer receives logs from its usual sending hosts, the detection and correlation rules cannot be applied properly. In that situation, analysts are likely to become aware of a security incident late, or not at all. The same applies if an attacker has taken control of a host or a user account and stopped all logging processes so that their illegitimate behavior goes unnoticed.

Artificial intelligence is seen as a very beneficial development for our society, allowing us to anticipate our needs and respond to them in advance. Its ability to understand and analyze a given context can help detect anomalies or unusual behaviors. Can it therefore strengthen the detection of log loss on hosts? That is what we will explore in this article, which is divided into five parts:

- Existing calculation methods

- Applying machine learning in Splunk with MLTK

- The limitations of detection with MLTK

- Another, more classic and cognitive approach

- The advantages and disadvantages

Existing calculation methods

Considering the very negative impact that log loss can have on the security of a company's systems, it is essential to know how to detect it. Three methods can be used to address this problem.

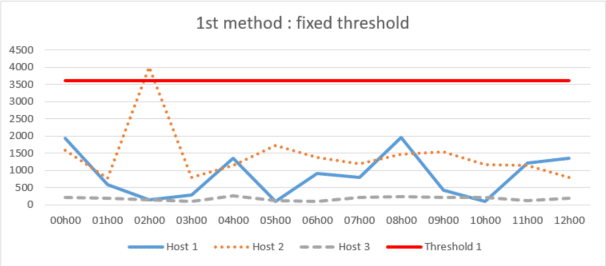

First method: fixed threshold

This method consists of estimating and defining a fixed maximum period during which no logs are received, either globally or for each host. As soon as a host exceeds the threshold, an alert is triggered.

This approach can be effective, but the thresholds may not match how the arrival of a host's logs varies during certain periods, so the tolerance applied may be too high or too low. It also ultimately relies on analysts to estimate the thresholds. It is therefore less efficient and less reliable, because it can trigger a large number of false alarms or miss log losses that should have been detected.
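As a minimal sketch of the fixed-threshold idea, assuming the last time each host was seen is already available as a dictionary (the host names, the `silent_hosts` helper and the one-hour threshold are hypothetical), the check could look like this:

```python
from datetime import datetime, timedelta

def silent_hosts(last_seen, now, max_silence):
    """Return the hosts whose most recent log is older than max_silence."""
    return sorted(
        host for host, ts in last_seen.items() if now - ts > max_silence
    )

# Hypothetical data: the last time each host sent a log.
now = datetime(2024, 1, 1, 12, 0, 0)
last_seen = {
    "web-01": now - timedelta(minutes=5),
    "db-01": now - timedelta(hours=3),
}

# The same fixed threshold is applied to every host.
alerts = silent_hosts(last_seen, now, max_silence=timedelta(hours=1))
print(alerts)
```

The single `max_silence` value is what makes this method fragile: a host that is legitimately quiet at night and a very verbose host are judged against the same limit.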

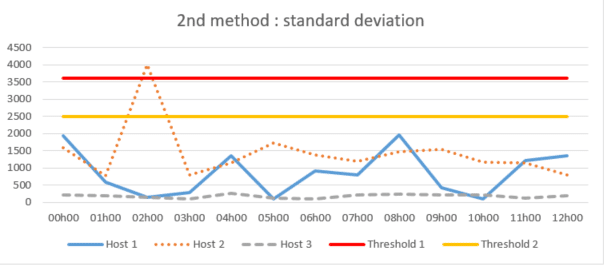

Second method: standard deviation

The standard deviation method consists of calculating how the arrival of logs varies around the historical average in order to define more precise, automated thresholds. It can be applied globally or for each host.

Although it seems to limit the problems of the first method, it has a real limitation when the arrival of a host's logs follows a trend or a seasonal pattern. The historical average then no longer represents the actual average for a given period and, consequently, the calculated thresholds may fail to detect a possible log loss.
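A minimal sketch of such a threshold, assuming the measured value is the gap in seconds between consecutive logs of one host and that the threshold is the historical mean plus `k` standard deviations (the sample values and `k = 3` are illustrative assumptions, not figures from this article):

```python
from statistics import mean, stdev

def stddev_threshold(gaps, k=3.0):
    """Upper threshold: historical mean plus k sample standard deviations."""
    return mean(gaps) + k * stdev(gaps)

# Hypothetical history of gaps (in seconds) between logs of one host.
history = [600, 610, 590, 605, 595, 600]
threshold = stddev_threshold(history)

# A one-hour silence is far above the threshold and would raise an alert.
is_lost = 3600 > threshold
print(round(threshold, 1), is_lost)
```

Because the mean and standard deviation are computed over the whole history, a host that is quiet every weekend would shift both values and weaken the threshold for weekdays, which is the limitation described above.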

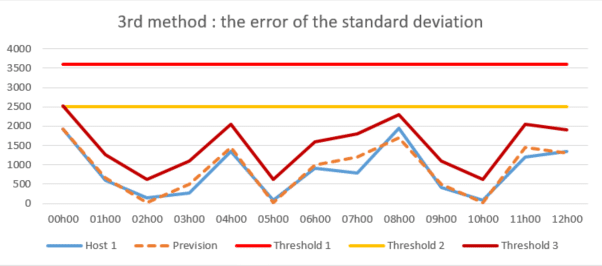

Third method: the standard deviation error

The standard deviation error method is similar to the previous one, but it calculates the forecast error in order to obtain an average error. This yields several standard deviations, and therefore several thresholds, each specific to a given period for each host.

This smarter detection method analyzes the variance of the forecast error instead of simply monitoring the arrival of logs around the historical average. It therefore detects threshold breaches much more precisely and brings the thresholds back to more plausible values. This makes it the most suitable method for detecting log loss, and it can be used and adapted directly with the Splunk MLTK application.
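The article does not state how the forecast is produced, so the following is only a sketch under a simplifying assumption: the forecast for a period is the mean gap observed in that period, and the threshold is the forecast plus `k` standard deviations of the forecast error. The period labels, sample values and `period_thresholds` helper are hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev

def period_thresholds(samples, k=3.0):
    """samples: iterable of (period, gap_seconds) -> {period: threshold}."""
    by_period = defaultdict(list)
    for period, gap in samples:
        by_period[period].append(gap)

    thresholds = {}
    for period, gaps in by_period.items():
        forecast = mean(gaps)                   # naive per-period forecast
        errors = [g - forecast for g in gaps]   # forecast errors
        thresholds[period] = forecast + k * pstdev(errors)
    return thresholds

# Hypothetical host: regular on weekdays, sparse on weekends.
samples = [("weekday", g) for g in (600, 610, 590, 600)]
samples += [("weekend", g) for g in (3000, 3600, 2400, 3000)]

th = period_thresholds(samples)
print({p: round(v, 1) for p, v in th.items()})
```

With one threshold per period, a 3000-second silence is flagged on a weekday but accepted on a weekend, which a single historical average could not express.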

Applying machine learning in Splunk with MLTK

What is MLTK?

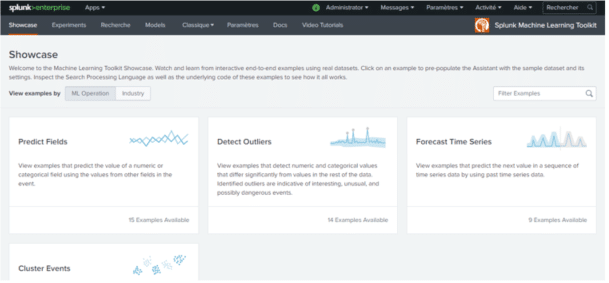

The Machine Learning Toolkit (MLTK) is a free application installed on the Splunk platform. It extends the platform's functionality and provides a guided machine learning modeling environment with:

- A showcase presenting the machine learning toolkit. It contains ready-to-use examples with real-world datasets that help users understand quickly and easily how the available methods and algorithms work.

- An experimentation assistant that lets users design and test their own models in a guided manner.

- An SPL search tab with MLTK-specific commands that lets users test and adapt their own models.

- 43 algorithms that can be used directly from the application, as well as a Python library containing several hundred open source algorithms for numerical computing.

MLTK therefore makes it possible to meet various objectives such as:

- Predicting numerical fields (linear regression)

- Predicting categorical fields (logistic regression)

- Detecting outliers in numerical values (distribution statistics)

- Detecting categorical outliers (probabilistic measures)

- Time series forecasting

- Clustering numerical events

Of the objectives mentioned above that MLTK can satisfy, only one machine learning model can address log loss detection using the standard deviation error method: outlier detection.

Outlier detection for missing hosts

Outlier detection identifies data points that deviate from the rest of a dataset. To obtain the data for this use case, simply record the time difference between consecutive logs emitted by the host and remove the null values that could skew the results. The model then calculates the standard deviation error for each data point in order to detect outliers.
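The data preparation step can be illustrated outside Splunk with a short sketch. It assumes timestamps are epoch seconds and that a missing timestamp is represented by `None`; both are assumptions made for this example, and `inter_arrival_gaps` is a hypothetical helper.

```python
def inter_arrival_gaps(timestamps):
    """Seconds between consecutive logs; missing timestamps are dropped first."""
    clean = sorted(t for t in timestamps if t is not None)
    return [later - earlier for earlier, later in zip(clean, clean[1:])]

# Hypothetical timestamps for one host, with one missing value.
gaps = inter_arrival_gaps([0, 600, None, 1200, 4800])
print(gaps)
```

The resulting list of gaps is the series on which the outlier detection is then applied.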

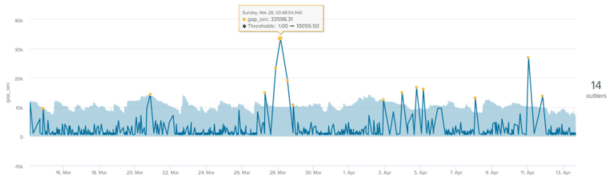

Here is an example of a log loss forecasting model in MLTK for a single host. Outliers (yellow dots) are the data points located outside the outlier envelope (light blue area). The value on the right side of the graph (14) indicates the total number of outliers.

We note that the outlier envelope, that is, the calculated thresholds, follows the general shape of the curve quite well, but that a number of outliers were nevertheless reported.

The limitations of detection with MLTK

Even though this method takes the seasonality of log arrivals into account, it still requires a certain regularity. Some hosts emit logs very irregularly whatever the period and therefore create false outliers in the datasets.

This is the case, for example, for the host below, which keeps a high coefficient of variation over periods ranging from 2 to 30 days. This coefficient is a relative measure of the dispersion of the data around the mean: it is equal to the ratio of the standard deviation to the mean. The higher the coefficient of variation, the greater the dispersion around the mean. Below, the coefficients of variation are greater than 1.5, meaning that the standard deviation is more than 1.5 times the mean. We consider that a standard deviation starts to be high when it represents half of the mean, so it is relatively high in this example:

| Periods | 2 days | 7 days | 14 days | 21 days | 30 days |
| --- | --- | --- | --- | --- | --- |
| Total frequency | 144 | 404 | 902 | 1338 | 1921 |
| Total population | 258591 | 688776 | 1295373 | 1900168 | 2653629 |
| Median | 600 | 600 | 600 | 600 | 600 |
| Average | 1795.77 | 1708.09 | 1436.11 | 1420.16 | 1381.38 |
| Minimum value | 598 | 580 | 559 | 559 | 559 |
| Maximum value | 28799 | 28799 | 31199 | 37204 | 37204 |
| Standard deviation | 3301 | 2869 | 2416 | 2446 | 2304 |
| Coefficient of variation | 1.84 | 1.68 | 1.68 | 1.72 | 1.67 |
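As a quick check of the definition, the coefficients of variation in the table can be recomputed from its "Average" and "Standard deviation" rows. The sketch below only reuses those published figures; the `high_cv_limit` name is introduced here to represent the "half of the mean" rule stated above.

```python
# (average, standard deviation) per period, copied from the table above.
rows = {
    "2 days": (1795.77, 3301),
    "7 days": (1708.09, 2869),
    "14 days": (1436.11, 2416),
    "21 days": (1420.16, 2446),
    "30 days": (1381.38, 2304),
}

# Coefficient of variation = standard deviation / mean.
cv = {period: round(sd / avg, 2) for period, (avg, sd) in rows.items()}

high_cv_limit = 0.5  # a standard deviation of half the mean is considered high
all_high = all(value > high_cv_limit for value in cv.values())
print(cv, all_high)
```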

The coefficient of variation also remains high (between 0.82 and 1.35) if we focus on specific days of the week over a 30-day period:

| Periods | Monday | Tuesday | Wednesday | Thursday | Friday | Saturday | Sunday |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Total frequency | 403 | 403 | 356 | 328 | 268 | 90 | 73 |
| Total population | 431224 | 407397 | 344989 | 346248 | 390887 | 383993 | 386387 |
| Median | 600 | 600 | 600 | 600 | 601 | 2104.5 | 3000 |
| Average | 1070.03 | 1010.91 | 969.07 | 1055.63 | 1318.62 | 4266.59 | 5292.97 |
| Minimum value | 595 | 559 | 580 | 592 | 594 | 590 | 598 |
| Maximum value | 7200 | 7200 | 8400 | 10800 | 8399 | 37204 | 31199 |
| Standard deviation | 988 | 835 | 794 | 1056 | 1379 | 5771 | 6871 |
| Coefficient of variation | 0.92 | 0.83 | 0.82 | 1.00 | 1.05 | 1.35 | 1.30 |

Furthermore, for very verbose hosts, the calculations also place a heavy performance load on the SIEM because of the large number of logs. The learning period of the model then has to be reduced, which affects the reliability of the calculated thresholds: the longer the learning period, the more data is available and the better the predicted thresholds.

Finally, it is very difficult to apply this model to a whole set of hosts with this solution. Each host emits logs in its own way, so the calculations must be adjusted for each host for the model to work correctly. This case-by-case adaptation can represent a considerable workload if several hundred or even thousands of hosts need to be monitored.

Another, more classic and cognitive approach

Although the cognitive (machine learning) solution detects threshold breaches much more precisely and at more plausible values, it is no less restrictive. It requires a certain regularity in the data, a fairly long learning period and therefore significant computing power, and case-by-case refinement of the calculations.

To overcome these constraints, another, more traditional approach, based on the idea behind the cognitive solution, was designed for general application across a set of hosts.

It consists, first, of relying on event counts rather than on the time difference between each event. This change of parameter is very important: even though the type of data is no longer the same, much less computing power is required, which allows a longer learning period.

The model then computes statistics to apply a threshold to each host based on its level of verbosity over several time slots. Coefficients are then applied to these thresholds, depending on the variation in values measured in the past, in order to refine them and obtain a precise threshold for each host at a given time. Even if this way of calculating thresholds may be considered more arbitrary, it is no less precise than the machine learning method. It also makes it possible to keep control over the tolerance for log loss and to refine the thresholds case by case across a set of hosts.
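The article does not give the exact statistics or coefficients used, so the following sketch is only one possible reading of the approach. It assumes that the baseline for a host and time slot is the mean of its past event counts, and that the coefficient shrinks the threshold as the past coefficient of variation grows. The `min_expected_count` and `is_log_loss` helpers, the factor `k` and the sample counts are all hypothetical.

```python
from statistics import mean, pstdev

def min_expected_count(history, k=2.0):
    """Lower bound on the event count for one host in one time slot.

    history: past event counts for the same host and the same slot.
    """
    baseline = mean(history)
    if baseline == 0:
        return 0.0
    cv = pstdev(history) / baseline
    coefficient = max(0.0, 1.0 - k * cv)  # more variable hosts get more tolerance
    return baseline * coefficient

def is_log_loss(current, history, k=2.0):
    """Flag a slot whose event count falls below the refined threshold."""
    return current < min_expected_count(history, k)

steady = [100, 110, 90, 100]   # hypothetical regular host
erratic = [10, 200, 0, 190]    # hypothetical very irregular host

print(is_log_loss(20, steady), is_log_loss(95, steady))
print(min_expected_count(erratic))
```

In this sketch the irregular host ends up with a threshold of zero, so it never raises an alert. That is a deliberate trade-off of the assumed coefficient, and `k` is the kind of tolerance that could be tuned case by case.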

The advantages and disadvantages

All common systems generate event logs whose volume varies over time (particularly with their activity), which creates a certain variability. There is therefore no completely reliable method for detecting log loss. Regardless of the nature of this variance, many external factors can occur and seriously impair the reliability of the method used. Log losses often result from external events, such as a malfunction of the collection agent or of the transmission API, the decommissioning of a machine, or restarts due to infrastructure, system, or software constraints that make log collection temporarily impossible.

Machine learning is getting a lot of attention right now and could have been a good avenue for improvement on this problem. However, the way it is presented often suggests that it can solve any problem as long as a context has been clearly defined and data is available. This is obviously not the case. Although machine learning can increase detection rates, detect attacks as early as possible, and improve the ability to adapt to change, machines are still constantly learning.

In most cases, machine learning is a skillful combination of technology and human intervention. That is why the more traditional approach best suits the context of this use case. Whether with MLTK or another machine learning solution, it is difficult to predict the data and to obtain acceptable confidence thresholds for each host because of the great variability that remains between hosts.